The 5% Playbook: What AI Pilots Taught Me About Failure (and Real ROI)

Last month, MIT dropped a bombshell: 95% of enterprise AI pilots fail to deliver measurable financial impact. I've been in the trenches for the past year—sitting through painful executive reviews, watching promising projects crash and burn, and consulting on initiatives that seemed bulletproof on paper. But I've also witnessed the rare wins. Here's what separates the 5% who succeed from the 95% who don't—and why this failure rate might actually be telling us something important about how innovation works.

The Number Everyone's Talking About

Walk into any tech conference these days, and someone will inevitably mention it: 95%. The failure rate that's making C-suites nervous and investors skittish.

One person put the question to me directly. "Look," she said, "if we're this bad at AI, should we even be trying?"

Here's what I told her: It depends entirely on what you're calling failure.

If your idea of an AI pilot is building a chatbot to impress the board, then yes—you're almost guaranteed to fail. But if you're running focused experiments with clear paths to real workflow integration, then a 95% failure rate isn't a bug. It's a feature. It means you're ruthlessly cutting the fluff to find the few ideas that actually move the needle.

The Same Mistakes, Over and Over

Some patterns are so predictable you could set your watch by them.

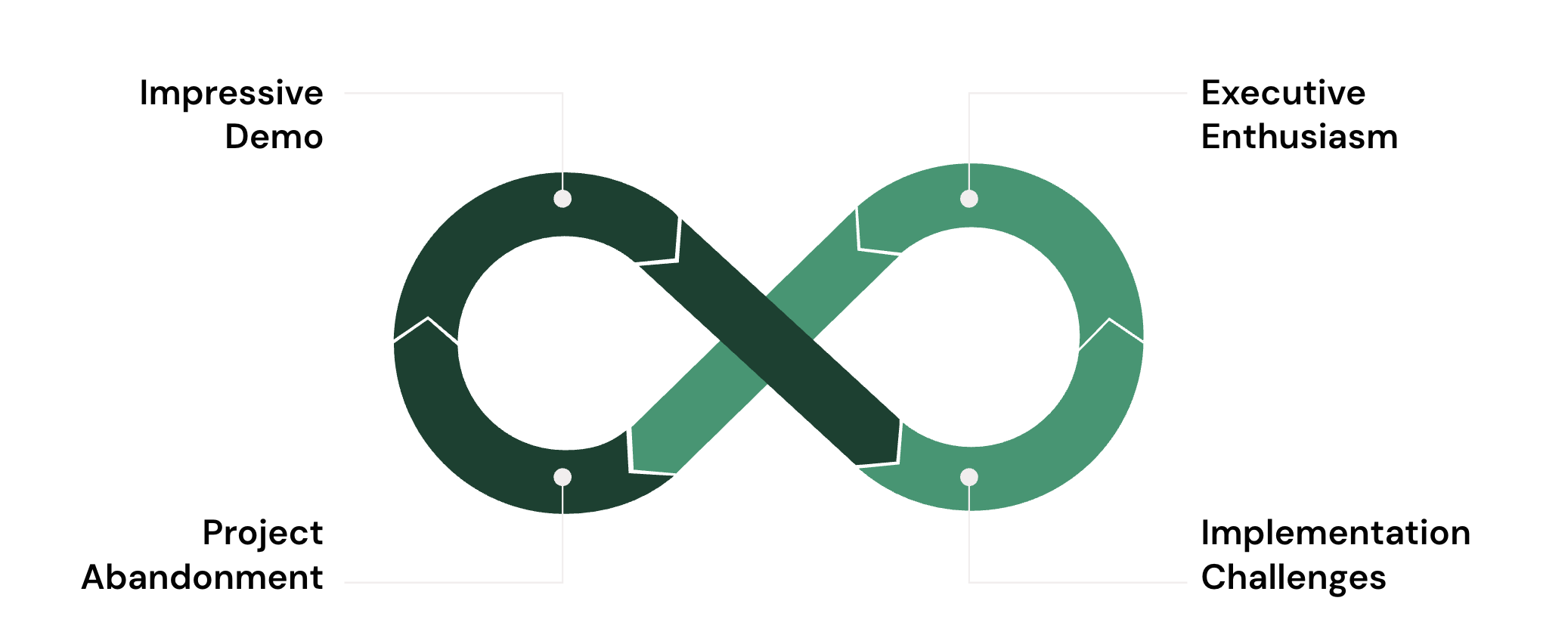

The Demo Trap. I've lost count of how many "AI strategies" I've seen that boil down to a single board presentation. Picture this: a slick demo where AI answers customer questions, everyone nods approvingly, and then... nothing happens for months. It's innovation as performance art, designed to check a box rather than solve a problem.

Buzzword Bingo. Pull up any corporate deck from the last two years and you'll see the same phrases: "AI-powered dashboards," "intelligent copilots," "AI-driven whatever." The projects that follow usually ignore the actual pain points people face every day in favor of whatever sounds impressive in a PowerPoint.

The Executive Gadget Problem. Here's something I see constantly: executives fall in love with sophisticated AI tools that end up gathering digital dust on their laptops. Meanwhile, the people who actually need to use these tools—the analysts, the support reps, the operations folks—never even see them.

The through line? AI gets treated like a side project for signaling progress instead of a system change for delivering real outcomes.

What Smart Companies Stopped Doing

The landscape is shifting, even if it's happening quietly.

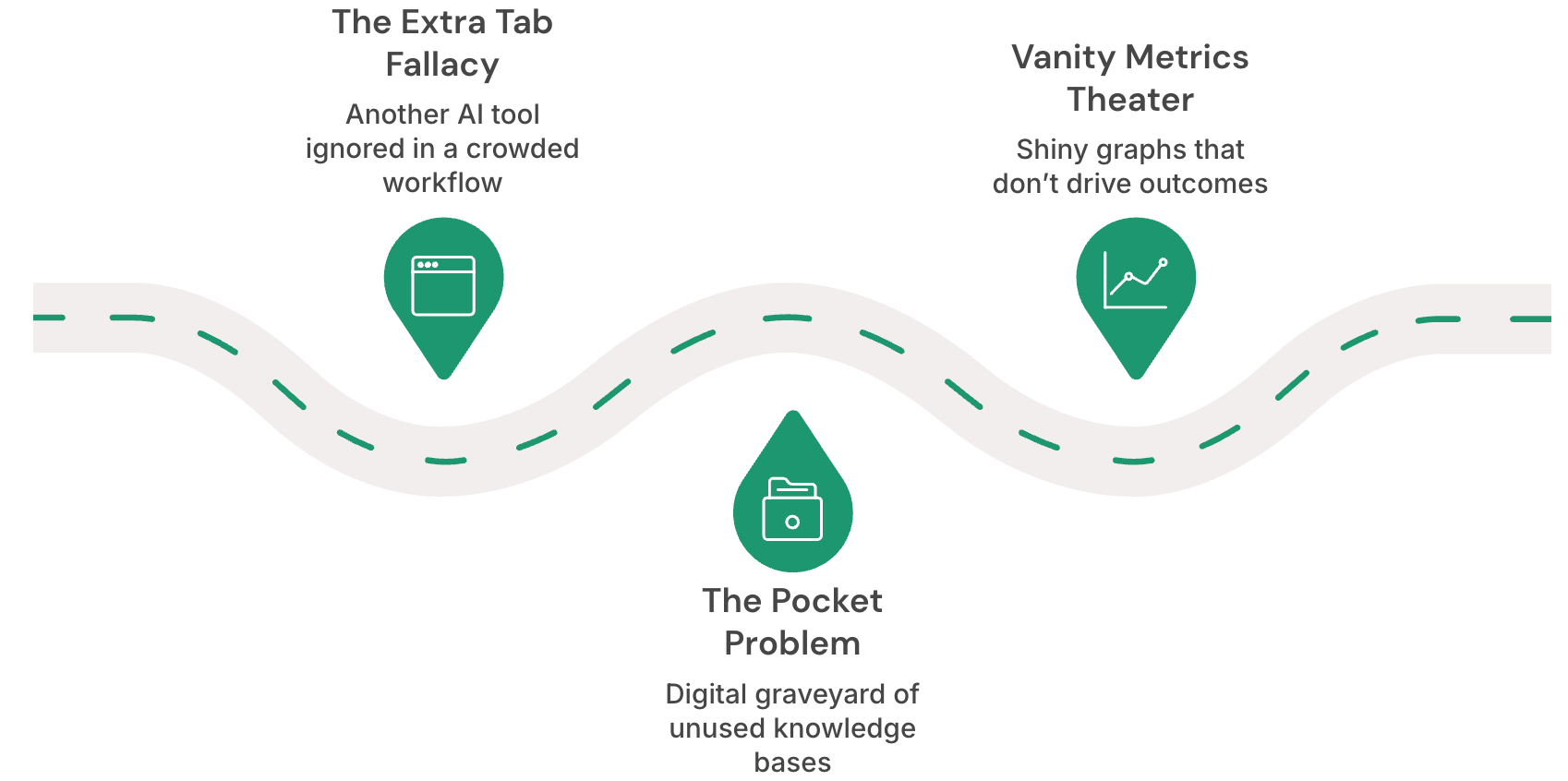

The Extra Tab Fallacy. For years, the default move was spinning up yet another tool. "Here's our AI knowledge base!" "Check out our new AI insights dashboard!" But here's the reality: people live in Salesforce, Jira, and ServiceNow. Every additional tab you force them to open is friction they'll avoid. By mid-2025, I started hearing CIOs admit what we all knew: those standalone tools never stuck.

The Pocket Problem. Remember all the excitement around AI knowledge bases and document libraries? They suffered from the same fate as Pocket—that app where you save articles you'll "definitely read later." Teams would dump documents into these AI systems and never come back. Without tight integration into actual workflows, they became expensive digital graveyards.

Vanity Metrics Theater. In 2023 and 2024, everyone was tracking "chat sessions" and "API calls." Boards loved seeing those usage graphs trending upward. But by this year, the questions got sharper: "This is great, but did it actually save us money?" Suddenly, those feel-good dashboards didn't seem so important.

The core issue isn't that AI is overhyped. It's that too many companies are deploying it as theater instead of plumbing.

When NOT to Do AI Pilots (Read This First)

The smartest thing some companies do is nothing. Before you start any AI initiative, honestly answer these questions:

Can You Measure the Problem? If you can't quantify what's broken, AI won't fix it. One consulting firm wanted to use AI to "improve client satisfaction." They couldn't define what that meant or how to measure it. I told them to solve that problem first, then come back to AI.

Is Your Data Infrastructure Functional? AI amplifies your data problems; it doesn't solve them. If you can't trust your current reports, adding AI will make things worse, not better. I've seen companies spend six months building beautiful AI models only to discover their source data was corrupted by a system migration years earlier.

Is Leadership Actually Aligned? AI pilots fail spectacularly when executives have different visions of success. I've watched six-month projects get killed because the CEO wanted cost savings and the COO wanted revenue growth, and nobody bothered to clarify the priority upfront.

Do You Have Change Champions? If you can't identify three people in your organization who are genuinely excited about this project and willing to advocate for it, don't start. AI adoption is a social process, not just a technical one. Without internal champions, even perfect technology sits unused.

Are You Prepared to Kill Bad Ideas? This is the big one. If you're not emotionally ready to shut down a project that isn't working, you'll end up in the 95%. The successful companies I've worked with kill more projects than they scale. That's not failure—that's good judgment.

If you answered "no" to any of these, stop. Fix those issues first. AI will still be here when you're ready.

What the 5% Actually Do Differently

The success stories I've seen aren't glamorous. They're not the kind of thing that gets you invited to speak at conferences. But they work.

Radical Focus. A European bank didn't try to "revolutionize finance with AI." They picked one specific pain point: invoice approvals that were taking 14 days and tying up millions in working capital. By cutting that cycle time to 2 days, they freed up cash flow that immediately hit the bottom line. Boring? Absolutely. Effective? You bet.

Integration Over Innovation. A SaaS company tried launching a standalone chatbot. It flopped. Then they embedded the same AI model directly into Zendesk, where their support team already lived. Suddenly, ticket resolution time dropped 40%. Same technology, different placement, completely different outcome.

Operators in the Driver's Seat. Here's something I've learned: when pilots are run by executives, adoption dies. When frontline teams own the tools, usage explodes. One healthcare system let nurses design their own AI-assisted intake workflow. Within two months, 90% of the nursing staff was using it daily.

Smart Partnerships. The 5% rarely try to build everything from scratch. They partner with vendors who already understand their compliance requirements, security constraints, and workflow realities. They're not trying to be AI companies—they're trying to be better at their actual business.

Obsessive ROI Tracking. Every successful project I've seen has hard numbers attached: hours saved, errors reduced, revenue captured. No vanity metrics, no fuzzy benefits. Just clear deltas that show up on financial statements.

Real Stories from the Trenches

Retail Reality Check. A global retailer launched AI pilots across marketing, logistics, and sales. Most went nowhere. But their AI-assisted demand forecasting in one region? That cut stockouts by 8% and boosted seasonal revenue by millions. The secret sauce wasn't the AI—it was the narrow scope and clear measurement.

Banking Breakthrough. One bank automated regulatory filings using AI copilots. Not exactly headline-grabbing stuff, but it halved preparation time and virtually eliminated audit risks. The project quietly scaled across their entire compliance organization because it solved a real, expensive problem.

Healthcare Hit and Miss. A large provider failed spectacularly with a chatbot pilot. Later, they embedded AI triage directly into their existing patient portal. Intake time dropped 30%, and this time it actually stuck—because it lived inside the workflow people were already using.

SaaS Success. A knowledge hub project crashed and burned. But when the same company integrated AI into their support ticket system, handle times fell and costs plummeted. The technology was identical. The placement made all the difference.

Industry-Specific Realities

AI adoption doesn't look the same everywhere, and the 5% tailor their approach accordingly.

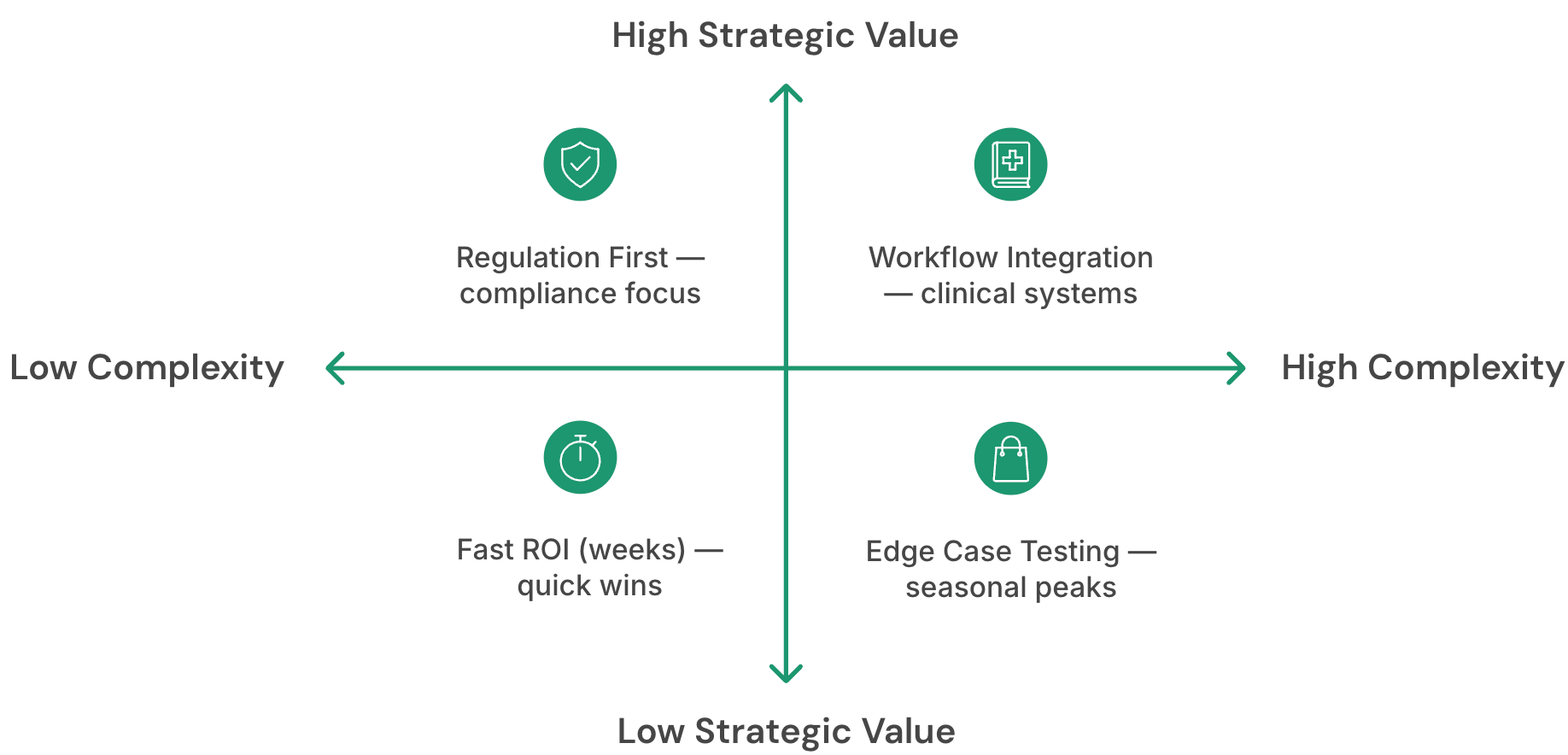

Financial Services: Regulation First. Banks and insurers that succeed start with compliance, not capabilities. They build AI that auditors can understand before they build AI that does anything impressive. One major bank I worked with spent eight months just documenting their AI decision-making process for regulators. That documentation became their competitive moat—they could deploy new models faster than competitors because regulators already trusted their process.

Healthcare: Workflow Integration or Nothing. Healthcare AI projects live or die based on how well they fit into existing clinical workflows. The successful ones don't ask doctors to change how they work—they augment what doctors already do. Epic integration isn't optional; it's the starting point.

Manufacturing: ROI in Weeks, Not Months. Manufacturing companies have no patience for long payback periods. The AI projects that stick reduce downtime, cut waste, or improve quality within 30 days of deployment. One automotive supplier I know killed six AI pilots before finding one that reduced defect rates by 12% in week three. That's the one they scaled.

Retail: Seasonal Proof Required. Retail AI has to survive Black Friday. I've seen beautiful demand forecasting models that worked perfectly in January and crashed spectacularly during holiday peaks. The survivors build for edge cases from day one.

Professional Services: Billable Hour Impact. Consulting and legal firms measure AI success differently: does it increase billable utilization or reduce non-billable admin time? One law firm's contract review AI didn't make lawyers faster—it freed up junior associates to work on higher-value tasks. Same hours, better margins.

The Money Trail: What Budgets Really Look Like

Enterprises are shifting how they budget for and deploy AI in 2025, and the successful ones follow predictable patterns.

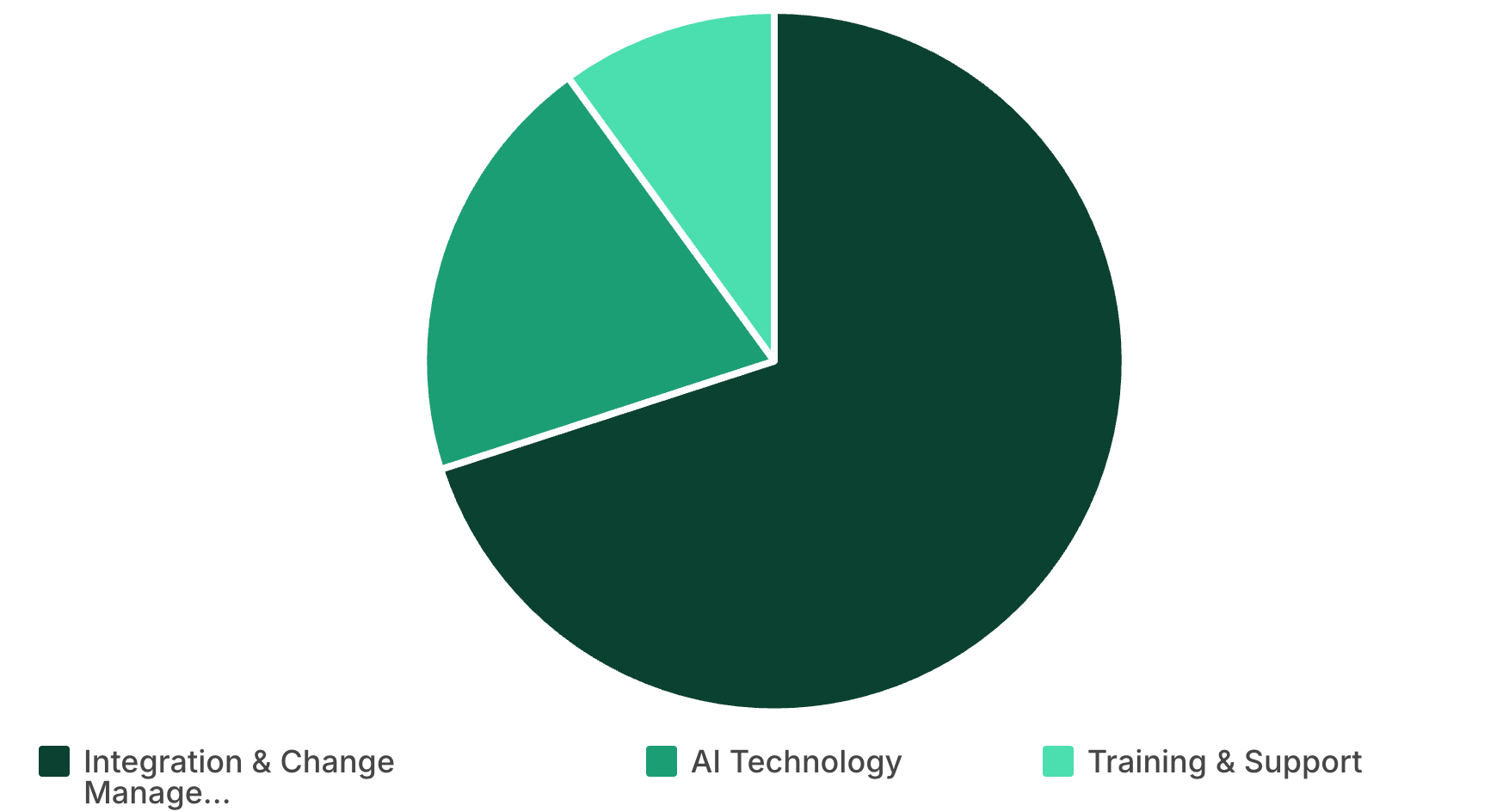

The 70-20-10 Rule. The winners allocate roughly 70% of their AI budget to integration and change management, 20% to the actual AI technology, and 10% to training and support. Most companies flip this completely backward.

A manufacturing client spent $2M on a state-of-the-art predictive maintenance platform and $50K on integration. Guess what didn't work? The next year, they reversed the ratio and suddenly had a system their technicians actually used.
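To make the split concrete, here is a minimal sketch of the 70-20-10 arithmetic. The category names and helper functions are hypothetical, and treating the client's $2M platform spend plus $50K integration spend as the whole budget is my simplifying assumption, not a detail from the engagement.

```python
# Illustrative sketch: names are hypothetical; the 70/20/10 percentages
# come from the rule of thumb described above.
DEFAULT_SPLIT = {
    "integration_and_change_management": 70,
    "ai_technology": 20,
    "training_and_support": 10,
}


def split_budget(total_dollars, split=DEFAULT_SPLIT):
    """Return dollars per category for a percentage split that sums to 100."""
    if sum(split.values()) != 100:
        raise ValueError("split percentages must sum to 100")
    return {name: total_dollars * pct / 100 for name, pct in split.items()}


def integration_share(platform_dollars, integration_dollars):
    """Fraction of the combined spend that went to integration."""
    return integration_dollars / (platform_dollars + integration_dollars)


# Assumption: the two figures quoted above are the entire budget.
total = 2_000_000 + 50_000
plan = split_budget(total)
actual = integration_share(2_000_000, 50_000)

for category, dollars in plan.items():
    print(f"{category}: ${dollars:,.0f}")
print(f"integration share actually spent: {actual:.1%}")
```

Under that assumption, integration got roughly 2.4% of the spend, against a rule of thumb that says 70%.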

Pilot Budgeting Reality. Here's what actually works:

| Phase | Budget | Timeline |
| --- | --- | --- |
| Discovery Phase | $25-50K | 6-8 weeks |
| MVP Build | $75-150K | 12-16 weeks |
| Integration Phase | $100-300K | 6-12 months |
| Scale Preparation | $200-500K | Varies |

The companies that succeed budget for failure. They plan for 3-4 pivots during the pilot phase and reserve 30% of their budget for unexpected integration costs.

Hidden Cost Categories. The real money isn't where you think:

- Data Engineering: Often 60% of total project cost

- Security Compliance: Can double timeline and budget for regulated industries

- Change Management: Most expensive part if you do it right, most expensive mistake if you don't

- Vendor Lock-in Mitigation: Planning for platform switches before you need them

ROI Reality Check. Successful AI projects typically break even in 6-18 months, not 3-5 years. If your financial model depends on benefits that won't materialize for years, you're probably in the 95%.
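A quick payback check is enough to sanity-test a financial model against that window. This is a rough sketch using simple, undiscounted payback. The function names and the dollar figures in the usage lines are made up for illustration; only the 18-month ceiling comes from the range above.

```python
import math


def months_to_break_even(upfront_cost, monthly_benefit, monthly_run_cost=0):
    """Simple payback period in whole months, or None if it never pays back."""
    net_monthly = monthly_benefit - monthly_run_cost
    if net_monthly <= 0:
        return None
    return math.ceil(upfront_cost / net_monthly)


def payback_verdict(months, max_months=18):
    """Compare a payback period with the upper end of the 6-18 month window."""
    if months is None:
        return "never breaks even"
    return "within window" if months <= max_months else "too slow"


# Hypothetical pilots: same upfront cost, very different monthly benefit.
fast = months_to_break_even(300_000, monthly_benefit=40_000, monthly_run_cost=10_000)
slow = months_to_break_even(300_000, monthly_benefit=15_000, monthly_run_cost=10_000)

print(fast, payback_verdict(fast))
print(slow, payback_verdict(slow))
```

The second pilot is the one to worry about: its payback lands at five years, squarely in the range that usually signals trouble.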

The Technical Reality Nobody Talks About

While everyone debates models and algorithms, the 5% who succeed are solving much more mundane problems.

Data Quality Hell. Nearly 96% of organizations face data quality issues, and it's killing pilots before they start. I watched a retail giant spend six months building a demand forecasting model, only to discover their inventory data had been corrupted by a system migration two years earlier. The AI was perfect—the data was garbage.

The survivors obsess over data lineage. One pharmaceutical company I worked with spent their first month just mapping where their clinical trial data comes from. Boring? Absolutely. But it's why their AI compliance tool actually works while their competitors' chatbots sit unused.

The Model Drift Trap. Model drift can negatively impact performance, resulting in faulty decision-making, and it happens faster than anyone expects. A financial services client launched an AI fraud detector that worked beautifully for three months. Then fraudsters adapted, and suddenly the model was missing 60% of cases.

The 5% build monitoring from day one. They don't just track accuracy—they track data distribution shifts, concept drift, and business metric correlation. One insurance company I know has alerts that fire when their claim processing model's confidence scores start trending downward. Not glamorous, but it's saved them millions.
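Two of those signals are cheap to compute. The sketch below shows one possible shape for them: an alert when recent confidence scores fall below a baseline, and a population stability index (PSI) for spotting input distribution shifts. The window sizes, the 0.05 drop threshold, and the bin edges are placeholder assumptions, and this is not the insurance company's actual setup.

```python
import math
from statistics import mean


def confidence_drop_alert(scores, baseline_size=50, recent_size=50, max_drop=0.05):
    """True when the recent mean confidence sits more than max_drop below baseline."""
    if len(scores) < baseline_size + recent_size:
        return False  # not enough history to compare yet
    baseline = mean(scores[:baseline_size])
    recent = mean(scores[-recent_size:])
    return (baseline - recent) > max_drop


def population_stability_index(expected, actual, edges):
    """PSI between two samples of one feature, bucketed by the given bin edges."""

    def bucket_shares(values):
        counts = [0] * (len(edges) + 1)
        for value in values:
            # Bucket index = number of edges the value meets or exceeds.
            counts[sum(value >= edge for edge in edges)] += 1
        # Floor each share so empty buckets do not produce log(0).
        return [max(count / len(values), 1e-6) for count in counts]

    e_shares = bucket_shares(expected)
    a_shares = bucket_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


# Hypothetical confidence histories: one steady, one that sags.
stable = [0.90] * 100
sagging = [0.90] * 50 + [0.80] * 50
print(confidence_drop_alert(stable), confidence_drop_alert(sagging))

# Hypothetical claim amounts at training time versus today.
training = [100, 200, 300, 400, 500, 600]
today = [400, 500, 600, 700, 800, 900]
print(population_stability_index(training, today, edges=[250, 550]))
```

A PSI of zero means the two samples fill the buckets identically. How large a value should page someone is a judgment call to tune per model.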

Integration Nightmares. The graveyard of AI pilots is littered with projects that worked perfectly in isolation but couldn't talk to existing systems. Compute costs, data collection costs, and privacy and data security requirements all compound when you're trying to retrofit AI into legacy infrastructure.

Smart companies audit their API ecosystem before they build anything. They map data flows, identify chokepoints, and design AI that fits their architecture, not the other way around.

The Latency Problem. AI that takes 30 seconds to respond might be acceptable for strategic analysis. It's useless for customer service or manufacturing quality control. One automotive company's defect detection AI was 99% accurate but too slow for the production line. They had to completely redesign the approach.
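One way to catch this before launch is to test tail latency against a budget set by the workflow, alongside accuracy. The sketch below uses a nearest-rank percentile. The 500 ms budget and the sample timings are invented for illustration and are not measurements from the automotive project.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_latency_budget(latencies_ms, budget_ms, pct=95):
    """True when the pct-th percentile latency fits inside the budget."""
    return percentile(latencies_ms, pct) <= budget_ms


# Hypothetical inference timings in milliseconds for two candidate models.
accurate_but_slow = [800, 950, 1200, 700, 3000, 900, 1100, 850, 1000, 950]
lighter_model = [120, 150, 180, 140, 400, 130, 160, 135, 170, 145]

budget_ms = 500  # assumed per-part time available on the line
print(meets_latency_budget(accurate_but_slow, budget_ms))
print(meets_latency_budget(lighter_model, budget_ms))
```

Checking a high percentile rather than the average matters here, because the slowest responses are the ones that stall a production line or a support queue.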

The Change Management Playbook the 5% Use

Scaling AI means turning isolated pilot programs into everyday practice through systematic change management, and that is the part most companies completely botch.

The Operator-First Strategy. Successful AI implementations start with the people who will actually use the tools, not the executives who will sponsor them. One logistics company I worked with formed their AI steering committee entirely of warehouse supervisors and dispatchers. No VPs, no directors. The adoption rate hit 85% within six weeks because the system solved problems operators actually had.

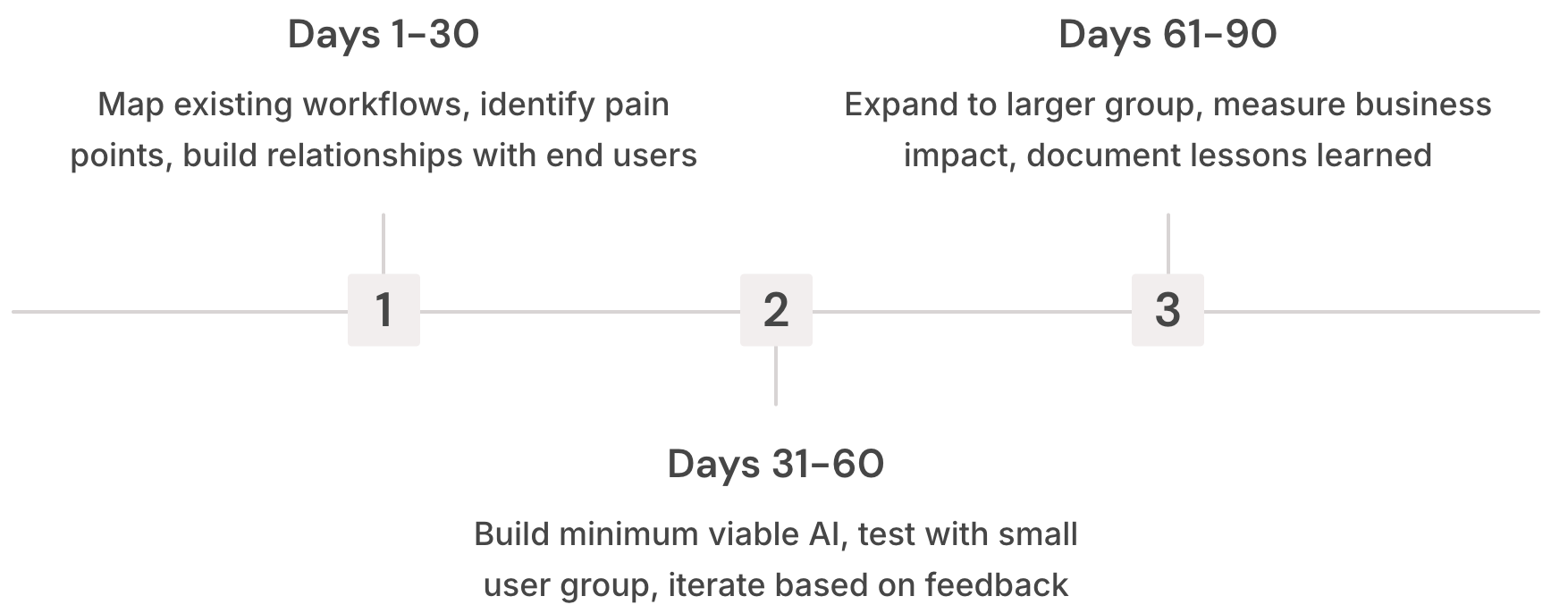

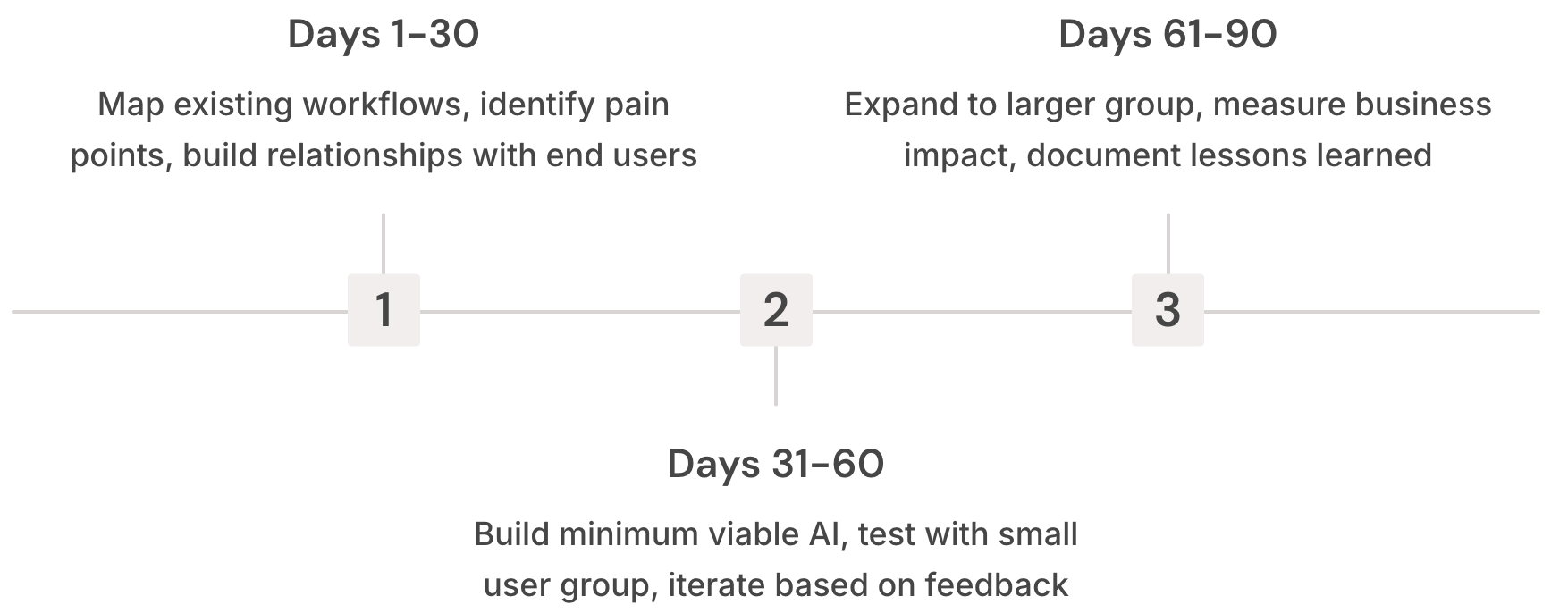

The 90-Day Proof Pattern. The 5% structure their pilots around 90-day proof cycles.

After 90 days, they have clear data on whether to kill, pivot, or scale. No emotional attachment, no sunk cost fallacy—just numbers.
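The decision rule works best when it is written down before the pilot starts. Here is a minimal sketch of what that could look like. The thresholds, the function name, and the sample numbers are all hypothetical. The only idea taken from the pattern above is that the outcome is one of kill, pivot, or scale, and that it is decided by a measured number.

```python
def pilot_decision(target_improvement, measured_improvement, pivot_floor=0.5):
    """Map a 90-day result to 'scale', 'pivot', or 'kill'.

    target_improvement: the improvement promised up front (e.g. 0.30 for 30%).
    measured_improvement: what the pilot actually delivered on the same metric.
    pivot_floor: assumed fraction of the target below which the pilot is killed.
    """
    if target_improvement <= 0:
        raise ValueError("target_improvement must be positive")
    attainment = measured_improvement / target_improvement
    if attainment >= 1.0:
        return "scale"
    if attainment >= pivot_floor:
        return "pivot"
    return "kill"


# Hypothetical pilots that all promised a 30% cut in handling time.
print(pilot_decision(0.30, 0.34))  # beat the target
print(pilot_decision(0.30, 0.18))  # partway there
print(pilot_decision(0.30, 0.05))  # barely moved
```

Agreeing on `pivot_floor` before day one is the point: it takes the sunk-cost argument off the table when the numbers come in.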

The Training That Actually Works. Forget classroom sessions and online modules. The companies that succeed use peer-to-peer learning. They identify early adopters, make them experts, then have them train their colleagues. One healthcare system trained five nurses intensively, then had those five train the rest of their units. Knowledge transfer was 3x faster than traditional methods.

Managing Resistance. AI adoption threatens people's sense of job security, even when it shouldn't. The successful implementations address this head-on. They start by automating the tasks people hate most, not the tasks that define their expertise. One accounting firm used AI to handle data entry and formatting, freeing CPAs to focus on analysis and client relationships. Instead of fear, they got gratitude.

Why 95% Failure Might Be Exactly Right

Here's a contrarian take: maybe 95% failure is exactly what we should expect.

Think back to the early days of cloud computing. Companies ran countless pilots in 2008 and 2009. Most failed. But the 5% that worked established patterns that everyone else could follow. Those early failures weren't waste—they were R&D for the entire industry.

Same story with mobile apps in 2010. Most were complete duds. But the handful that succeeded showed everyone what was possible and reshaped entire industries.

High failure rates are how industries learn. The danger isn't the 95% failure rate. The danger is pretending those failures are successes, or worse, not learning from them.

The Learning Compound Effect. Each failure teaches the industry something valuable. Early AI pilots failed because of data quality issues—now data engineering is a standard first step. They failed because of user adoption problems—now change management is part of every serious AI budget. They failed because of integration complexity—now API-first design is table stakes.

The Pattern That's Emerging

AI adoption is following the same curve as every major platform shift:

Experimentation Phase (2023-2025): Scattered pilots, sky-high failure rates, lots of learning.

Pattern Recognition Phase (2026-2027): Clear playbooks emerge from the winners, vendors start productizing best practices.

Standardization Phase (2027-2029): CIOs enforce templates, procurement gets streamlined, risk profiles become predictable.

Differentiation Phase (2029+): Leaders pull ahead by combining AI with their unique data, culture, and market position.

If 2023-2025 was "AI theater," then 2026-2027 will be the grind of "AI plumbing"—less flashy, more foundational, ultimately more valuable.

The Next Wave of Failures (And How to Avoid Them)

Based on what I'm seeing heading into 2026, here are the failure patterns emerging:

AI-Washing Existing Products. Companies are slapping "AI-powered" labels on basic automation and calling it innovation. The market is getting smarter about this. Customers are starting to ask harder questions about what the AI actually does.

Multi-Model Complexity. Organizations are building Frankenstein systems with six different AI models that don't talk to each other. Integration costs are exploding, and troubleshooting becomes impossible. The survivors are consolidating around fewer, more capable platforms.

Prompt Engineering Theater. Companies are hiring "prompt engineers" to optimize ChatGPT conversations instead of building real AI capabilities. It's the new version of having a "social media manager" in 2010—a role that feels important but doesn't scale.

The Subscription Model Trap. AI-as-a-Service costs that seem reasonable for pilots become crushing at scale. One company I know is spending $2M annually on AI subscriptions for tools that save them $500K in labor costs. The math doesn't work.
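The arithmetic is worth writing out, because it is the same check every subscription-heavy pilot should pass before scaling. This is a small sketch using the two figures from that example. The function names are mine.

```python
def annual_net_value(annual_savings, annual_subscription_cost):
    """Savings minus subscription spend for one year (negative means a loss)."""
    return annual_savings - annual_subscription_cost


def savings_per_dollar(annual_savings, annual_subscription_cost):
    """How many dollars of savings each subscription dollar buys."""
    return annual_savings / annual_subscription_cost


# Figures from the example above: $2M in subscriptions, $500K in labor savings.
net = annual_net_value(500_000, 2_000_000)
ratio = savings_per_dollar(500_000, 2_000_000)

print(f"net annual value: ${net:,}")
print(f"savings per subscription dollar: ${ratio:.2f}")
```

That works out to a $1.5M annual loss, or 25 cents back for every dollar spent. Rerunning the same two functions with projected costs and savings at full rollout is a cheap way to see whether the economics survive scale.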

Governance Lag. Companies that move fast on AI pilots without building governance frameworks are creating compliance nightmares. When regulators start auditing AI decisions—and they will—many organizations will discover they can't explain how their models work or why they made specific decisions.

The pattern is always the same: what looks smart in the pilot phase becomes expensive and unwieldy at scale. The 5% plan for this from day one.

The Questions That Actually Matter

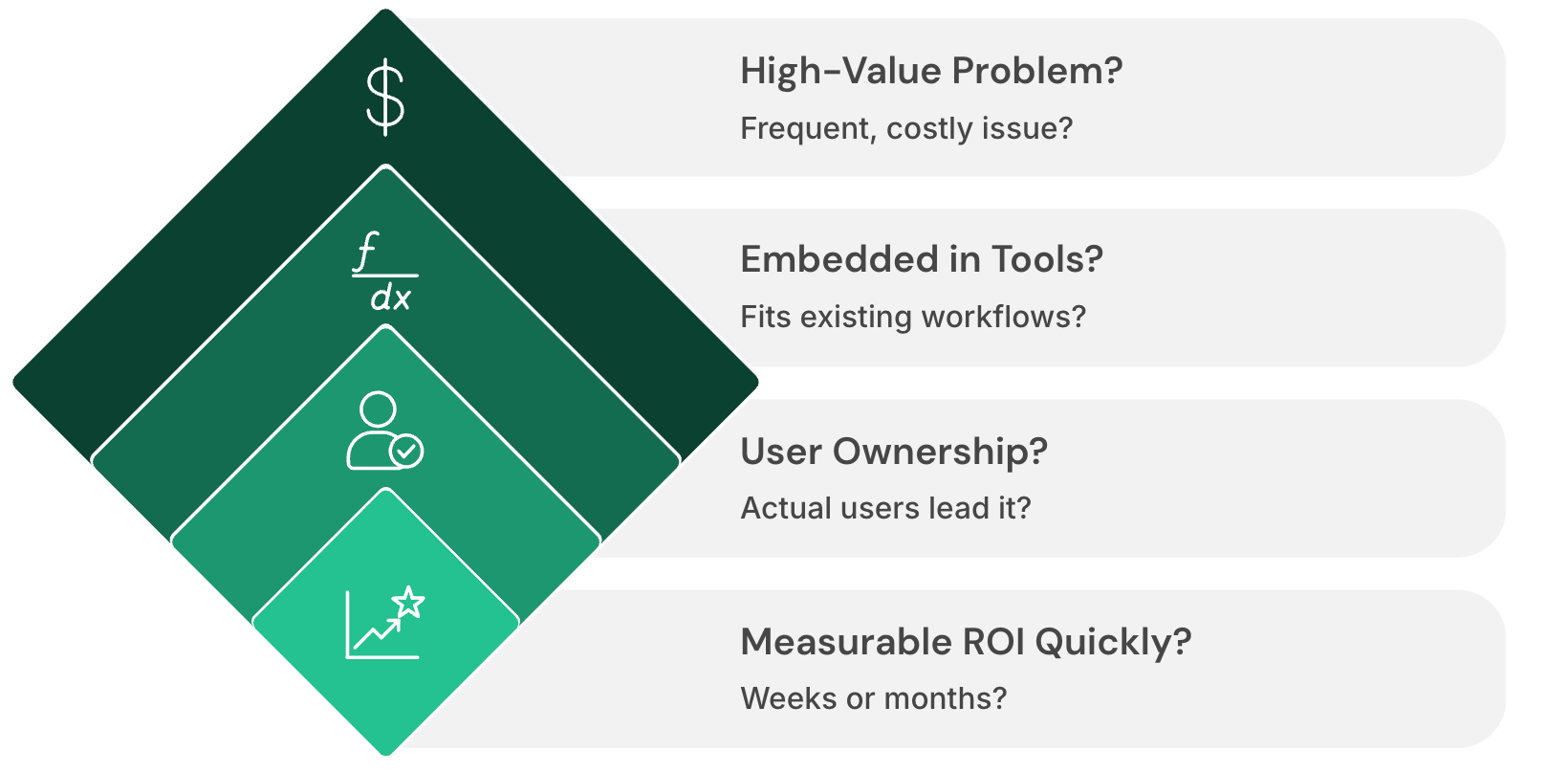

If you're running AI projects today, stop asking "Which model should we use?" Start asking:

- Does this solve a frequent, expensive business problem that people complain about every day?

- Will this live inside tools people already use, or am I forcing them to learn something new?

- Do the people who actually do this work own this initiative, or just the executives?

- Can I measure ROI in weeks or months, not quarters or years?

- Am I emotionally prepared to kill this project if it doesn't deliver?

- What happens when this scales 10x? Will the economics still work?

- How will I explain this AI's decisions to an auditor or regulator?

These questions are what separate the 95% from the 5%.

The Real Takeaways

95% of AI pilots fail—not because AI is broken, but because most organizations approach it wrong.

The 5% who win aren't using better models or spending more money. They win with boring specificity: tight focus, workflow integration, operator ownership, smart vendor partnerships, and obsessive ROI measurement.

Failure at this scale isn't fatal—it's the natural pruning mechanism that every transformative technology needs.

The future belongs to companies that master AI plumbing, not AI theater.

The organizations that figure this out first won't be the ones making the most noise about AI. They'll be the ones quietly solving expensive problems, measuring everything, and building competitive advantages that compound over time.

The playbook is sitting right there in the 5% who are already winning. The question is: are you willing to be boring enough to join them?