RAG vs. Fine-Tuning vs. Agents: The Non-Dogmatic Buyer's Guide

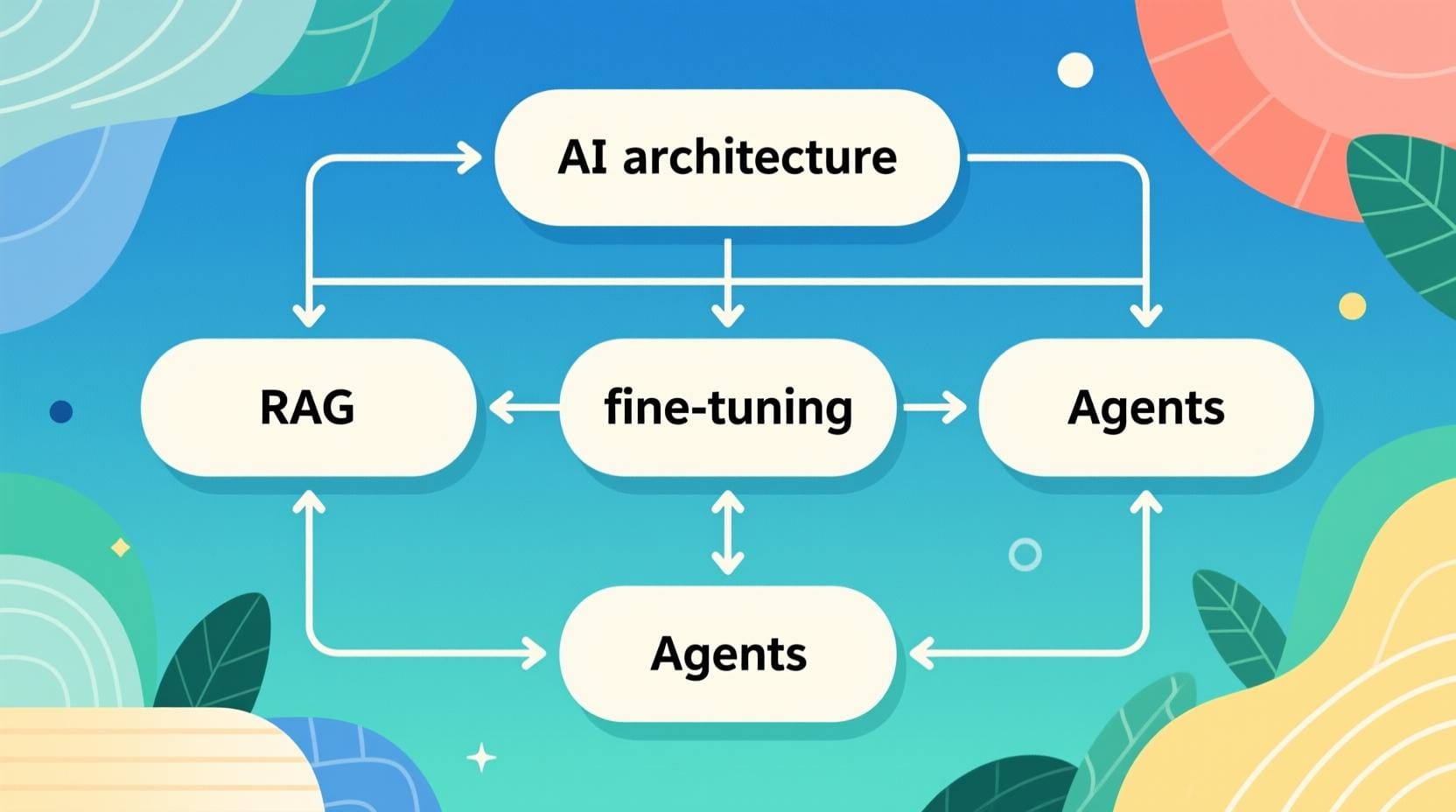

A practical decision framework for choosing the right AI approach without the religious wars

The $50,000 Question in a Conference Room

Picture this: You're sitting in a strategy meeting. The VP of Engineering just finished explaining why fine-tuning is the only serious approach. The Head of Data Science counters that RAG is obviously superior for knowledge management. Meanwhile, the Innovation Director insists that AI agents are the future and everything else is legacy thinking.

Sound familiar?

This scene plays out in companies worldwide every day. Teams treat their AI architecture choices like religious denominations – "we're a RAG shop" or "we only fine-tune" or "agents are the only way forward." The result? Expensive mistakes, missed opportunities, and solutions that fight against business requirements instead of serving them.

The truth is simpler and more nuanced: Each approach has distinct strengths, and the best organizations use them strategically rather than dogmatically.

The Problem with AI Architecture Dogma

The AI implementation landscape suffers from what I call "hammer syndrome" – when you have a favorite tool, everything looks like a nail. This creates three common failure patterns:

The RAG Evangelists believe external knowledge retrieval solves everything. They build elaborate vector databases for problems that would be solved better with a simple fine-tuned model.

The Fine-Tuning Purists want to bake all knowledge into model weights. They spend months training domain-specific models that become obsolete the moment new information emerges.

The Agent Maximalists see autonomous AI as the answer to every workflow. They architect complex multi-agent systems for simple tasks that could be handled with basic API calls.

Each group has valid points, but their exclusive thinking costs businesses time, money, and competitive advantage. Predictions that 2024 would bring broad enterprise AI adoption largely did not play out: budgets stayed constrained, and AI technology often stayed in the "experimental" category.

The real question isn't which approach is "best" – it's which approach fits your specific context, constraints, and goals.

RAG: The Knowledge Swiss Army Knife

Retrieval-Augmented Generation connects language models to external knowledge bases, enabling real-time information access without modifying model weights.
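To make the retrieve-then-generate idea concrete, here is a minimal sketch. It is not a production design: the documents and their ids are invented, the similarity measure is a plain bag-of-words cosine rather than a learned embedding, and the final model call is left out, so the function only builds the prompt that would be sent.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; ids and text are made up for illustration.
DOCS = {
    "policy-001": "Employees may work remotely up to three days per week.",
    "policy-002": "Expense reports must be submitted within 30 days of purchase.",
    "faq-017": "Password resets are handled through the self-service portal.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Return the ids of the k most similar documents that share any word with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id) for doc_id, text in docs.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]

def build_prompt(query, docs, k=2):
    """Assemble the prompt for the language model and report which sources it contains."""
    ids = retrieve(query, docs, k)
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ids)
    prompt = (
        "Answer using only the sources below and cite their ids.\n"
        f"{context}\n\nQuestion: {query}"
    )
    return prompt, ids

prompt, sources = build_prompt("How many days can employees work remotely?", DOCS)
print(prompt)
print("sources:", sources)
```

Two properties of RAG show up even in this toy version: adding an entry to `DOCS` makes it retrievable on the next query with no training step, and the returned `sources` list records which documents informed the answer.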

RAG's Core Strengths

Information Freshness: RAG excels at serving up-to-date information. When new data arrives, such as today's news or a new company policy, a RAG-based solution can use it to answer questions as soon as the document is indexed. No retraining required.

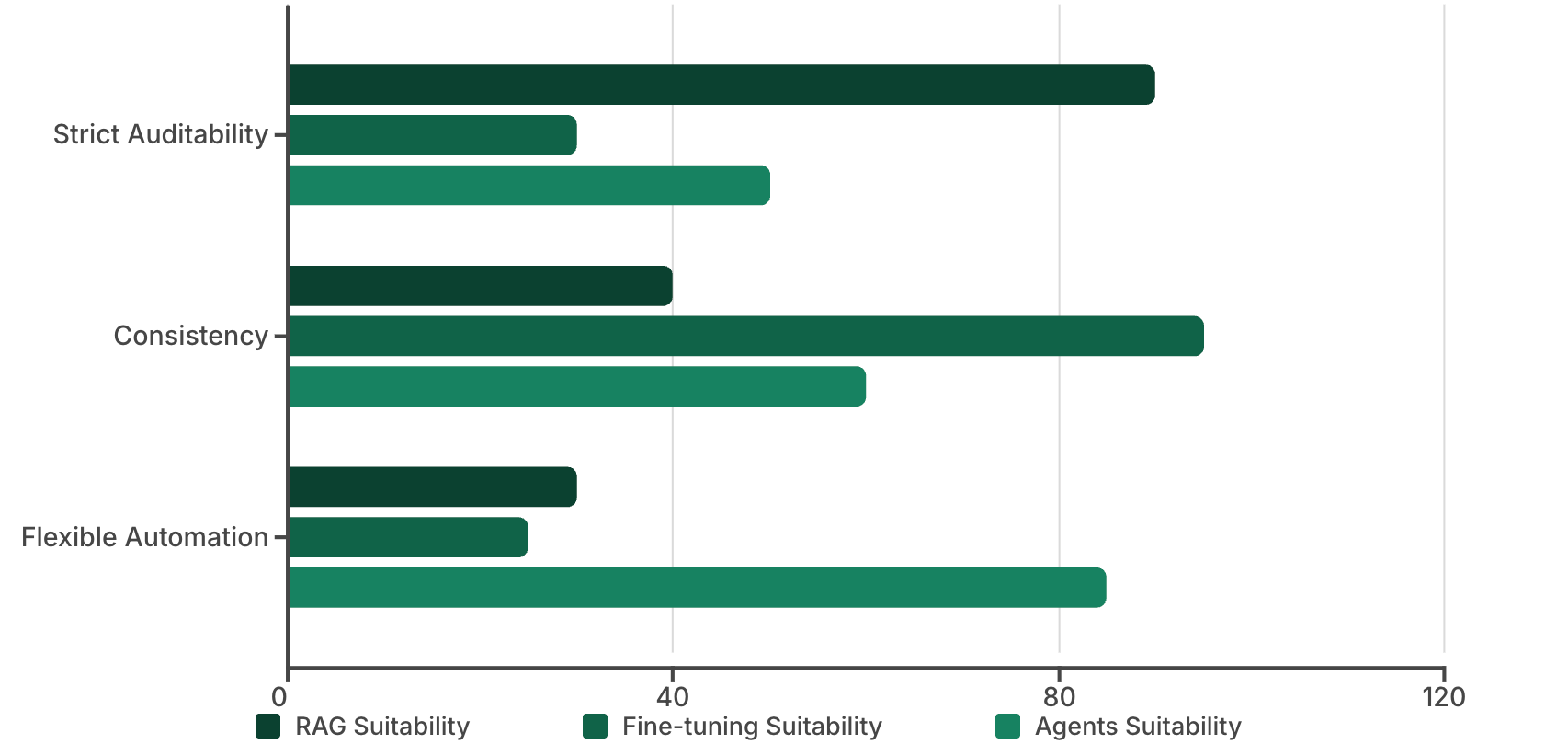

Compliance and Auditability: Every RAG response can trace back to specific source documents. When a medical AI cites a study or a legal assistant references a statute, you know exactly where that information originated.

Cost Efficiency: Building a RAG system requires infrastructure investment upfront, but ongoing costs remain predictable. You're not paying for expensive model retraining cycles every time your knowledge base updates.

Governance Control: RAG systems excel in regulated environments where information lineage matters. You can update, remove, or restrict access to specific documents without touching the underlying model.
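One way to picture this kind of control is a filter that runs before retrieval. The sketch below assumes a hypothetical `Document` record with an `allowed_roles` field; real systems usually push this filtering into the search index, but the principle is the same: the model never sees what the caller is not allowed to read, and a withdrawn document simply stops being a candidate.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    # Hypothetical record shape, chosen for illustration only.
    doc_id: str
    text: str
    allowed_roles: frozenset

def visible_documents(corpus, role):
    """Keep only the documents the given role may read; run this before retrieval."""
    return [d for d in corpus if role in d.allowed_roles]

def withdraw(corpus, doc_id):
    """Remove a document from the corpus; the model itself is untouched."""
    return [d for d in corpus if d.doc_id != doc_id]

corpus = [
    Document("hr-001", "Salary bands for 2024.", frozenset({"hr"})),
    Document("kb-010", "How to reset a password.", frozenset({"hr", "support"})),
]

print([d.doc_id for d in visible_documents(corpus, "support")])
print([d.doc_id for d in withdraw(corpus, "kb-010")])
```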

RAG's Limitations

Retrieval Quality Bottleneck: RAG is only as good as its retrieval system. Poor chunking strategies, inadequate embeddings, or weak search algorithms will surface irrelevant information, leading to hallucinated or incorrect responses.

Latency Overhead: With fine-tuned models, the knowledge lives in the model weights, so a response is generated in a single inference pass with no external lookup. RAG systems must first retrieve relevant documents and then generate a response, which adds measurable latency.

Context Window Limitations: Retrieved information must fit within the model's context window alongside the user query and conversation history. For complex questions requiring multiple sources, this becomes a real constraint.
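The constraint is easy to see as a budgeting problem. The sketch below is a simple greedy packer under loud assumptions: token counts are approximated as whitespace-separated words (real tokenizers differ), chunks arrive already ranked best-first, and the function and parameter names are invented for this example.

```python
def approx_tokens(text):
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())

def pack_context(ranked_chunks, window, query, history="", reserve_for_answer=0):
    """Greedily keep ranked chunks that still fit in the window.

    The budget is what remains after the query, the conversation history,
    and the space reserved for the model's answer.
    """
    budget = window - approx_tokens(query) - approx_tokens(history) - reserve_for_answer
    kept, dropped = [], []
    for chunk in ranked_chunks:
        cost = approx_tokens(chunk)
        if cost <= budget:
            kept.append(chunk)
            budget -= cost
        else:
            dropped.append(chunk)
    return kept, dropped

chunks = ["a b c d", "e f g h i j", "k l"]
kept, dropped = pack_context(chunks, window=12, query="q1 q2", reserve_for_answer=3)
print("kept:", kept)
print("dropped:", dropped)
```

Anything in `dropped` is information the model never sees, which is why a question that needs many sources can hit this limit even when retrieval itself works well.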

RAG Works Best For:

- Knowledge Management Systems: Corporate wikis, documentation platforms, research databases

- Customer Support: FAQ systems, policy lookups, troubleshooting guides

- Compliance-Heavy Industries: Healthcare, legal, financial services where auditability is crucial

- Rapidly Changing Information: News, market data, inventory systems

Fine-Tuning: The Specialist's Precision Tool

Fine-tuning adjusts pre-trained model weights using domain-specific data, creating specialized models optimized for particular tasks or domains.

Fine-Tuning's Core Strengths

Domain Specialization: For highly specialized tasks, a fine-tuned model often outperforms a general model using RAG because it has deeply internalized the domain's patterns. A model fine-tuned on medical literature can handle clinical terminology and phrasing in ways that retrieval alone often cannot match.

Consistent Tone and Style: Fine-tuning excels at embedding specific communication patterns, brand voice, or professional standards directly into model behavior. Customer service models can maintain consistent tone across thousands of interactions.

No Retrieval Latency: Once fine-tuned, the model's response time includes no retrieval step. For high-frequency, low-latency applications, this performance advantage is crucial.

Offline Capability: Fine-tuned models work without external dependencies. Once deployed, they function independently of databases, APIs, or network connectivity.

Fine-Tuning's Limitations

Information Staleness: A fine-tuned model is limited to what it saw during its last training run. Incorporating new information means another training cycle, which makes fine-tuned models a poor fit for rapidly evolving knowledge domains.

High Computational Costs: Training and maintaining fine-tuned models requires significant GPU resources, especially for larger models. Costs scale with model size and training frequency.

Brittleness with Distribution Shift: Fine-tuned models can perform poorly when encountering inputs that differ from their training distribution. A model trained on formal business writing might struggle with casual customer messages.

Version Control Complexity: Managing multiple versions of fine-tuned models across development, staging, and production environments creates operational overhead that RAG systems avoid.

Fine-Tuning Works Best For:

- Customer Support: Tone consistency, domain-specific responses, brand voice maintenance

- Content Generation: Marketing copy, technical writing, creative content with specific style requirements

- Specialized Analysis: Medical diagnosis support, legal document review, financial analysis

- Edge Computing: Applications requiring offline functionality or minimal latency

Agents: The Orchestration Powerhouse

AI agents combine language models with the ability to take actions, use tools, and make decisions across multi-step workflows.
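At its core, that description amounts to a loop: decide on an action, run a tool, look at the result, repeat. The sketch below shows that loop under heavy simplification. The `scripted_policy` function is a stand-in for a language model call, and the tool, prices, and action format are all hypothetical.

```python
PRICES = {"widget": 4.0}  # made-up data for the example tool

def lookup_price(item):
    """Example tool: return the unit price of an item."""
    return PRICES[item]

def scripted_policy(goal, history):
    """Stand-in for a language model: picks the next action from the history so far."""
    if not history:
        return {"tool": "lookup_price", "input": "widget"}
    price = history[-1][2]
    return {"tool": "finish", "input": f"3 widgets cost {3 * price:.2f}"}

def run_agent(goal, policy, tools, max_steps=5):
    """Run the decide-act-observe loop until the policy finishes or the step limit is hit."""
    history = []  # (tool_name, tool_input, result) for every step taken
    for _ in range(max_steps):
        action = policy(goal, history)
        if action["tool"] == "finish":
            return action["input"], history
        if action["tool"] not in tools:
            raise KeyError(f"unknown tool: {action['tool']}")
        result = tools[action["tool"]](action["input"])
        history.append((action["tool"], action["input"], result))
    raise RuntimeError("step limit reached before the agent finished")

answer, steps = run_agent("price 3 widgets", scripted_policy, {"lookup_price": lookup_price})
print(answer)
print(steps)
```

The `max_steps` cap and the recorded `history` are deliberate: even a toy agent benefits from a hard stop and a trail of what it did.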

Agents' Core Strengths

Workflow Automation: Agents excel at chaining together complex sequences of actions. They can research a topic, draft a document, send it for review, incorporate feedback, and schedule publication – all with minimal human oversight.

Dynamic Decision Making: Unlike conventional software systems that follow strict, rule-based programming, AI agents use machine learning algorithms to analyze data and determine actions based on probabilities. This enables adaptive responses to changing conditions.

Tool Integration: Modern agents can seamlessly interact with APIs, databases, external services, and other software systems, creating powerful automation possibilities that simple language models cannot achieve.

Scalable Problem Solving: Agents can handle multiple concurrent tasks, delegate work to specialized sub-agents, and coordinate complex operations that would overwhelm traditional automation approaches.

Agents' Limitations

Unpredictability and Control: Agentic AI introduces branching, non-linear risks: unintended consequences, bias, and potential harm. The same flexibility that makes agents powerful can lead to unexpected behaviors in production environments.

Governance Complexity: New roles must be introduced, such as prompt engineers to refine interactions, agent orchestrators to manage agent workflows, and human-in-the-loop designers to handle exceptions and build trust. The operational overhead of managing agents is significant.

Error Amplification: When agents make mistakes, those errors can cascade through multiple systems and actions before humans detect them. A single incorrect decision can trigger expensive or damaging downstream effects.
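A back-of-the-envelope calculation shows why long chains are fragile. The sketch assumes every step succeeds independently with the same probability, which real workflows will not satisfy exactly, and the 95% figure is illustrative rather than measured.

```python
def chain_success(per_step_success, steps):
    """Probability that every step in a chain succeeds, assuming independent steps."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    return per_step_success ** steps

for steps in (1, 5, 10, 20):
    print(steps, round(chain_success(0.95, steps), 3))
```

Under these assumptions, a ten-step workflow with 95% reliability per step completes cleanly only about 60% of the time, which is the argument for checkpoints and human review between steps.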

Resource Consumption: Agents often make multiple API calls, access various services, and maintain complex state. This can lead to unpredictable cost spikes, especially with usage-based pricing models.
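A common mitigation is a hard spending cap that every tool or model call must pass through. The class below is a hypothetical sketch of that idea, not any framework's API; the names and dollar amounts are invented.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a call would push spending past the configured limit."""

class CostGuard:
    def __init__(self, limit_usd):
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def charge(self, label, cost_usd):
        """Record a cost, or refuse it if the limit would be exceeded."""
        if self.spent_usd + cost_usd > self.limit_usd:
            raise BudgetExceeded(f"{label} would exceed the {self.limit_usd:.2f} USD limit")
        self.spent_usd += cost_usd

guard = CostGuard(limit_usd=1.0)
guard.charge("search", 0.5)
guard.charge("model call", 0.5)
try:
    guard.charge("model call", 0.25)
except BudgetExceeded as exc:
    print("stopped:", exc)
```

Calling `charge` before each action, rather than after, means the agent stops before the overspend happens instead of reporting it afterwards.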

Agents Work Best For:

- Operations Automation: DevOps workflows, system monitoring, incident response

- Research and Analysis: Market research, competitive intelligence, data analysis pipelines

- Content Workflows: Multi-step content creation, review, and publication processes

- Integration Projects: Connecting disparate systems and automating cross-platform workflows

The Decision Framework: Beyond the Hype

Instead of religious adherence to a single approach, successful AI implementations require strategic thinking about three key dimensions:

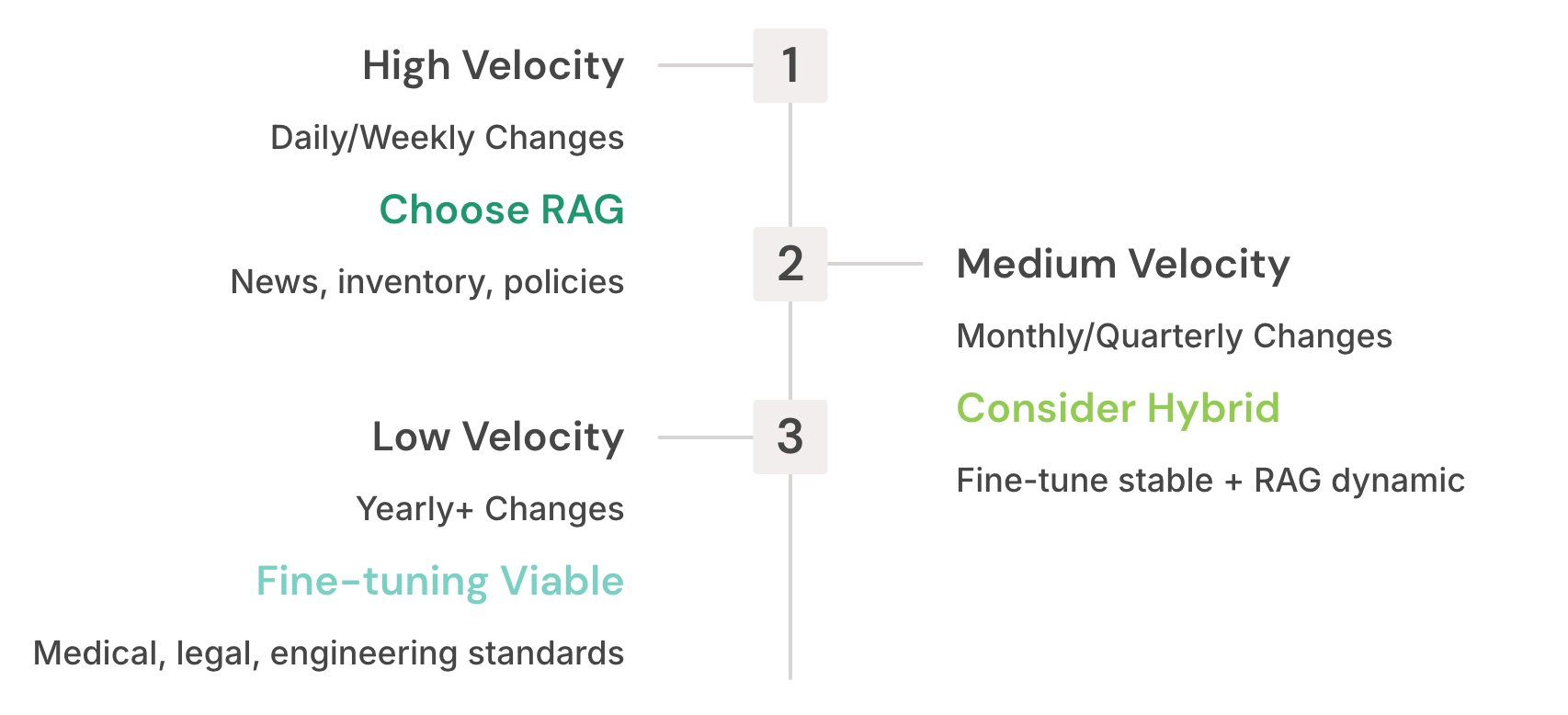

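Dimension 1: Information Velocity

How quickly does the underlying knowledge change? Fast-moving information (news, policies, inventory) favors RAG, because new documents can be used as soon as they are retrievable. Stable domain knowledge is a better candidate for fine-tuning, where a model only knows what it saw in its last training run.

Dimension 2: Governance Requirements

How much traceability and control do you need? RAG can tie each response to source documents and lets you update, remove, or restrict documents without touching the model. Agents sit at the other end and need the most oversight, including human-in-the-loop handling for exceptions.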

Dimension 3: Cost Structure

Upfront vs. Ongoing Costs: RAG requires infrastructure investment but predictable ongoing costs. Fine-tuning has high training costs but lower inference costs. Agents have variable costs tied to usage and complexity.
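These cost shapes can be compared with a small model. Everything numeric below is invented purely to show the arithmetic; substitute your own quotes and volumes. The `CostModel` fields are assumptions about how to split the costs, not an industry standard.

```python
from dataclasses import dataclass

@dataclass
class CostModel:
    upfront: float             # one-time build or initial training cost
    fixed_per_month: float     # hosting, index maintenance, monitoring
    per_request: float         # inference (and retrieval) cost per request
    retrain_cost: float = 0.0  # cost of one retraining run
    retrains_per_year: float = 0.0

    def total(self, months, requests_per_month):
        """Total cost of ownership over the given number of months."""
        retraining = self.retrain_cost * self.retrains_per_year * months / 12
        return (
            self.upfront
            + self.fixed_per_month * months
            + self.per_request * requests_per_month * months
            + retraining
        )

# Hypothetical figures, chosen only to illustrate the comparison.
rag = CostModel(upfront=20_000, fixed_per_month=1_500, per_request=0.004)
fine_tuned = CostModel(upfront=30_000, fixed_per_month=500, per_request=0.002,
                       retrain_cost=8_000, retrains_per_year=4)

for name, model in (("RAG", rag), ("fine-tuned", fine_tuned)):
    print(name, round(model.total(months=12, requests_per_month=100_000)))
```

With these made-up inputs, quarterly retraining dominates the fine-tuning total. Setting `retrains_per_year` to zero reverses the ranking, which is the point: how often the knowledge changes drives the cost comparison as much as the price list does.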

Scale Economics: Fine-tuning outperforms RAG when addressing slow-to-change challenges, such as adapting the model to a particular domain or set of long-term tasks. RAG outperforms fine-tuning on quick-to-change challenges.

Decision Tree Framework

Use this practical decision tree:

- Do you need real-time information or frequent updates?
  - Yes → RAG or Hybrid
  - No → Consider fine-tuning

- Do you need to trace every response to source documents?
  - Yes → RAG
  - No → Continue

- Do you need to automate multi-step workflows?
  - Yes → Agents (with RAG/fine-tuning components)
  - No → Continue

- Is consistent tone/style more important than information freshness?
  - Yes → Fine-tuning
  - No → RAG

- Do you have budget for ongoing training cycles?
  - Yes → Fine-tuning viable
  - No → RAG or pre-trained models
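The questions above can also be written down as a checklist function. This is one possible reading of the tree, in which each question contributes a note rather than ending the walk; the function name and parameters are invented for the example.

```python
def recommend(fresh_info, source_tracing, multistep, tone_over_freshness, training_budget):
    """Walk the five questions in order and collect the suggestion from each one."""
    notes = []
    notes.append("RAG or hybrid" if fresh_info else "consider fine-tuning")
    if source_tracing:
        notes.append("RAG")
    if multistep:
        notes.append("agents (with RAG/fine-tuning components)")
    notes.append("fine-tuning" if tone_over_freshness else "RAG")
    notes.append("fine-tuning viable" if training_budget else "RAG or pre-trained models")
    return notes

# A support knowledge base: fresh content, citations required, no workflows.
print(recommend(fresh_info=True, source_tracing=True, multistep=False,
                tone_over_freshness=False, training_budget=False))
```

When the notes disagree, for example fresh information is needed but tone matters more than freshness, that disagreement is itself a signal that a hybrid design deserves a look.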

Real-World Case Snapshots

Case 1: B2B SaaS Knowledge Base (RAG Victory)

Challenge: Mid-stage SaaS company with 500+ help articles, constant product updates, and compliance requirements.

Solution: RAG system with vector database, automated document ingestion, and source citation.

Results: 40% reduction in support ticket volume, 2-hour information freshness (vs. weeks with fine-tuning), full audit trail for compliance.

Why RAG Won: High information velocity, strict traceability requirements, predictable cost structure.

Case 2: Financial Services Content Generation (Fine-Tuning Victory)

Challenge: Investment firm needed consistent, compliant communication for client reports with specific regulatory tone requirements.

Solution: Fine-tuned model on 10,000+ historical reports, regulatory guidelines, and approved language patterns.

Results: 60% faster report generation, 90% reduction in compliance review cycles, consistent brand voice across all outputs.

Why Fine-Tuning Won: Stable domain knowledge, style consistency priority, offline deployment requirements.

Case 3: Manufacturing Operations (Agent Victory)

Challenge: Large manufacturer needed automated incident response coordinating multiple systems, teams, and workflows.

Solution: Multi-agent system with specialized agents for monitoring, diagnosis, escalation, and documentation.

Results: 70% faster incident resolution, 24/7 automated response capability, integrated workflow across 15+ systems.

Why Agents Won: Complex multi-step automation, system integration requirements, dynamic decision-making needs.

The Non-Dogmatic Path Forward

The most successful AI implementations combine multiple approaches strategically:

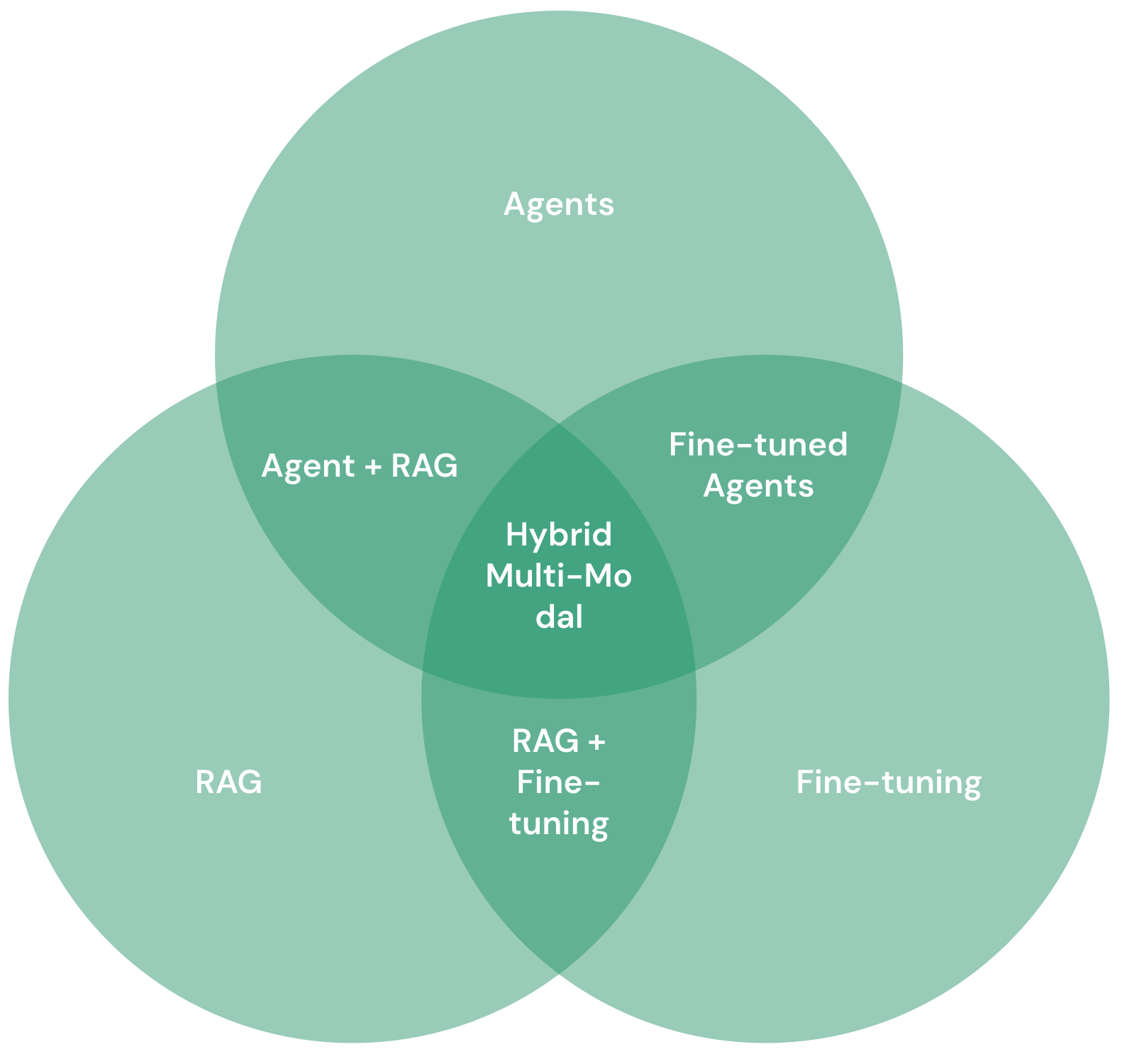

Hybrid Architecture Example: An enterprise knowledge system might use:

- RAG for dynamic information retrieval

- Fine-tuned models for consistent communication style

- Agents for workflow orchestration and user interaction management

Evolution Strategy: Start with the simplest approach that meets your requirements, then add complexity as you scale:

- Phase 1: Implement RAG for knowledge access

- Phase 2: Add fine-tuned components for style consistency

- Phase 3: Introduce agents for workflow automation

- Phase 4: Optimize the integrated system based on usage patterns

The Future is Hybrid

Often, you can customize a model by combining fine-tuning with a RAG architecture. The leading organizations aren't choosing between RAG, fine-tuning, and agents. They're building systems that use all three, each where it adds the most value.

Emerging Patterns:

- RAG + Fine-tuning: Vector databases for information retrieval with fine-tuned models for consistent response formatting

- Agent + RAG: Autonomous agents that dynamically retrieve information to inform decision-making

- Fine-tuned Agents: Specialized agents with domain-specific capabilities embedded through fine-tuning

Avoiding the Expensive Mistakes

The costliest AI architecture decisions share common patterns:

Mistake 1: Technology-First Thinking: Starting with "we need AI agents" instead of "we need to solve X business problem."

Mistake 2: Ignoring Information Lifecycle: Building fine-tuned models for rapidly changing information or RAG systems for stable domain knowledge.

Mistake 3: Underestimating Governance Overhead: Every approach needs investment in tooling and process to manage known, changing, and new risks from AI. Agents especially require significant governance infrastructure.

Mistake 4: Single-Point Solutions: Forcing one approach to solve all problems instead of building integrated systems.

Conclusion: Embrace the Nuance

The question isn't whether RAG, fine-tuning, or agents is "better" – it's which approach serves your specific context most effectively. The most successful AI implementations combine approaches strategically, evolve architectures as requirements change, and resist the temptation of one-size-fits-all solutions.

Your competitive advantage won't come from picking the "right" AI architecture. It will come from building systems that adapt to your business requirements, scale with your growth, and evolve with your changing needs.

Stop fighting religious wars about AI approaches. Start building systems that work.

What's your experience? Have you seen successful hybrid implementations or costly architecture mistakes? Share your stories – the community learns from real-world lessons, not vendor pitches.